The problem

One of the increasingly interesting use cases for AR is the ability to have persistent virtual content appearing in real-life locations, such as informational graphics outside of major landmarks, or directional content along a route.

This use case is the core feature of grifgraf. Users on grifgraf post virtual graffiti onto real-life locations, which persist at those locations to be viewed by other users of the app.

This blog post describes the method grifgraf uses to fulfill this use case.

But first, I want to thank the folks at Movable Type for their fantastic article about Vincenty Solutions linked here. Their explanations and code were really helpful for this project.

Summary

We are dealing with two different sets of coordinates:

- 📍 Local coordinates: This refers to the rotation, X, Y and Z coordinates ARKit uses to position virtual content in relation to your phone's camera.

- 🌎 Geo coordinates: This refers to the latitude, longitude, altitude, and rotation values of virtual content

The technical challenges here are the following:

- 📍→🌎 Converting local coordinates into geo coordinates.

- 🌎→📍 Converting geo coordinates into local coordinates.

Precisely placing AR content in a real-life place requires deriving a distance in meters between two two-dimensional points on a sphere's surface, using the constant of the sphere's circumference. This is ultimately a geodesic problem - the earth is an oblong sphere (sorry flat earthers).

The most accurate geodesic formulae I found for calculating distances between points on the earth are the Vincenty formulae. The inverse problem solves, 🌎→📍, where the direct problem solves 📍→🌎.

Saving a gif of Columbo to this wall on Zhongshan Road in Taipei results in its local coordinates being converted into geo coordinates and saved to the server.

Those coordinates are later pulled from the server and converted to local coordinates when a user is nearby, where it's placed back into the spot it was saved back.

The math

The notation here maps to the notation found here.

🌎→📍 Inverse:

IV(Φu Lu, Φu Lg) = distance east (s1)

IV(Φu Lu, Φg Lu) = distance north (s2)

(Gx, Gy) = (Ux, Uy) + (s1, s2)

Given the user's lat/lon, and the graf's lat/lon, the distance east and north are derived.📍→🌎 Direct:

V(Φu Lu, s1) = (Φ1, Lg)

V(Φu Lu, s1) = (Φg, L1)

↓

(Φg, Lg)

Given the user's lat/lon, and the distances north and east to the graf, the lat/lon of the graf is derived.Implementation

Note that the local coords (📍) are tracked with Swift's SIMD3 type, and the global coords (🌎) are tracked with Swift's CLLocationCoordinate2D type.

Quaternion rotation is stored and tracked using eastUpSouthQTarget and stored using Swift's SIMD4 type. This post does not go into detail about that process.

🌎→📍 Inverse Vincenty

func coordsToPosition(_ coords: CLLocationCoordinate2D, _ deviceCoords: CLLocationCoordinate2D, _ deviceLocation: SIMD3<Float>) -> SIMD3<Float> {

var vert = vincentyDistance(la1: coords.latitude, lo1: coords.longitude, la2: deviceCoords.latitude, lo2: coords.longitude)

var horiz = vincentyDistance(la1: deviceCoords.latitude, lo1: deviceCoords.longitude, la2: deviceCoords.latitude, lo2: coords.longitude)

if (deviceCoords.longitude > coords.longitude) {

// User is east of the grafent

horiz *= -1

}

if (deviceCoords.latitude < coords.latitude) {

// User is south of the grafent

vert = -1

}

let horiz2= Float(horiz)

let vert2= Float(vert)

return SIMD3(x: horiz2 + deviceLocation.x, y: 0.0, z: vert2 + deviceLocation.z)

}📍→🌎 Direct

func positionToCoords(_ userCoords: CLLocationCoordinate2D, _ userPosition: SIMD3<Float>, _ position: SIMD3<Float>) -> CLLocationCoordinate2D {

let east = position.x - userPosition.x

let north = userPosition.z - position.z

let latCoord = vincentyLoc(la: userCoords.latitude, lo: userCoords.longitude, distance: Double(north), direction: 2)

let lonCoord = vincentyLoc(la: userCoords.latitude, lo: userCoords.longitude, distance: Double(east), direction: 0.5)

return CLLocationCoordinate2D(latitude: latCoord.latitude, longitude: lonCoord.longitude)

}

Unit tests

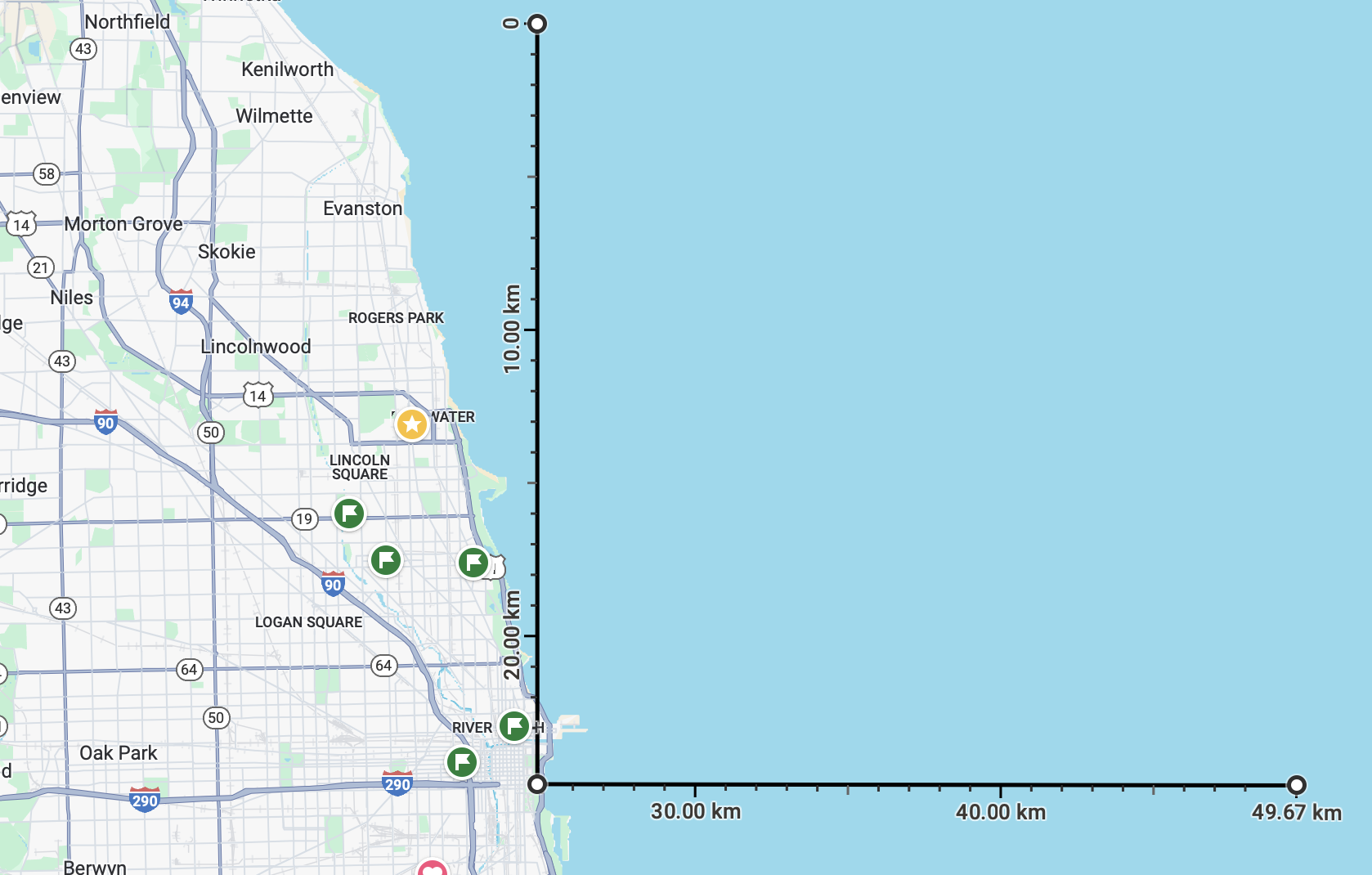

I used Buckingham Fountain in Chicago as the point of reference for unit testing.

let buckingham = CLLocationCoordinate2D(latitude: 41.87579360778173, longitude: -87.6189474796952)Sanity check for same coordinates and 0 distance:

func testSameCoordinates() throws {

// Should return the same coordinates back and forth

let userCoords = buckingham

// Buckingham fountain

let entCoords = userCoords

let pos = manager.vincentyDistance(la1: entCoords.latitude, lo1: entCoords.longitude, la2: userCoords.latitude, lo2: userCoords.longitude)

XCTAssertEqual(pos, 0)

let inverse = manager.vincentyLoc(la: userCoords.latitude, lo: userCoords.longitude, distance: 0, direction: 0)

XCTAssertEqual(userCoords.latitude, inverse.latitude)

XCTAssertEqual(userCoords.longitude, inverse.longitude)

}Testing that known coordinates 25km north of Buckingham Fountain are recognized as being 25km north, and that when that distance is added to the Buckingham coordinates, we get (very close to) those same coordinates back:

func testNorth() throws {

// Should find accurate location for north bearing

let coords = CLLocationCoordinate2D(latitude: 42.09917158204169, longitude: -87.6189474796952)

let pos = gem.vincentyDistance(la1: coords.latitude, lo1: coords.longitude, la2: buckingham.latitude, lo2: buckingham.longitude)

let inverse = gem.vincentyLoc(la: buckingham.latitude, lo: buckingham.longitude, distance: pos, direction: 2)

// Accurate to 9 decimal places. This is a fraction of an inch, we good

XCTAssertEqual(round(coords.longitude * 1000000000) / 1000000000.0, round(inverse.longitude * 1000000000) / 1000000000.0)

XCTAssertEqual(round(coords.latitude * 1000000000) / 1000000000.0, round(inverse.latitude * 1000000000) / 1000000000.0)

}

Testing that known coordinates 25km east of Buckingham Fountain are recognized as being 25km east, and that when that distance is added to the Buckingham coordinates, we get (very close to) those same coordinates back:

func testEast() throws {

// Should find accurate location for east bearing

let coords = CLLocationCoordinate2D(latitude: 41.875403244, longitude: -87.318948854)

let pos = gem.vincentyDistance(la1: coords.latitude, lo1: coords.longitude, la2: buckingham.latitude, lo2: buckingham.longitude)

let inverse = gem.vincentyLoc(la: buckingham.latitude, lo: buckingham.longitude, distance: pos, direction: 0.5)

// Accurate to 9 decimal places. This is a fraction of an inch, we good

XCTAssertEqual(round(coords.longitude * 1000000000) / 1000000000.0, round(inverse.longitude * 1000000000) / 1000000000.0)

XCTAssertEqual(round(coords.latitude * 1000000000) / 1000000000.0, round(inverse.latitude * 1000000000) / 1000000000.0)

}

Any coordinate pair can be plugged into these tests and they will pass.

Here's a google maps approximation of the points used in the unit test. Each test starts at the middle point (Buckingham Fountain) and measures the distance to a point in Lake Michigan. Plugging that distance and direction into vincentyDistance, it leaps to the correct point (with a small margin of error).

Other attempts

I'd like to talk about all the interesting hacks and workarounds I tried to make this work. Much effort went into experimenting to get this nice result you see above.

Initially, I serialized the arSession, saving it to geographic radius and uploaded it to a server. When a user entered the radius, the camera would say "point your phone at this area" with a captured image of that area, and then load the session from the internet.

It was accurate and reliable, but it sucked. It sucked because real graffiti doesn't wait for you to stand in front of it for a while to appear. It's not realistic, its inefficient. and it also would only work on iPhone.

My favorite attempt has to be my attempt at using OpenStreetMap to create invisible surfaces in the AR world that map precisely onto real life walls. This worked by grabbing the users immediate area in OSM, and turning any building nodes into square nodes that other AR content will stick to. If this worked, it would have been really really cool because it meant that you could actually identify certain walls and the art on them, and generate the entire world of graffiti as a 3D model in blender.

The downside would be that if you wanted to put a graf on something that wasn't on OSM, like an electrical box or temporary structure of some kind, you couldn't do it.

The contributors to OSM are doing fantastic work creating a detailed map of the real world and such data has many great use cases, but highly accurate geo-tracked AR experiences aren't one of them. The data just isn't accurate enough.